Qualifying data quality

Last updated on August 26th, 2022

Like many myths, this one has a basis in reality. Artificial intelligence is an incredibly powerful tool that can dig into mountains of data to find nuggets of insight that would be otherwise invisible to human beings. However, this has led to the misconception that AI can easily solve any problem. For all its power, AI is still a tool and, just like other tools, you need to know what you’re working on in order to get the most out of it.

Generic “AI” providers might argue that domain knowledge doesn’t matter because they’re just looking for patterns in the data. If I can use AI to accomplish such diverse tasks as identifying images of cats, beating professional Go players, and predicting engine failures, why would domain knowledge matter?

What is Data Quality?

While the meaning of the term ‘data quality’ is still contentious, we define data quality at Acerta in terms of reliability, traceability, and completeness. Data reliability is by far the most important. It represents the combination of accuracy and precision, such that if your data is reliable, you can be confident that it’s telling you the truth. To put in more concrete terms, you can rely on the fact that when you have a signal that tells you the voltage for a process is five, it is indeed 5V.

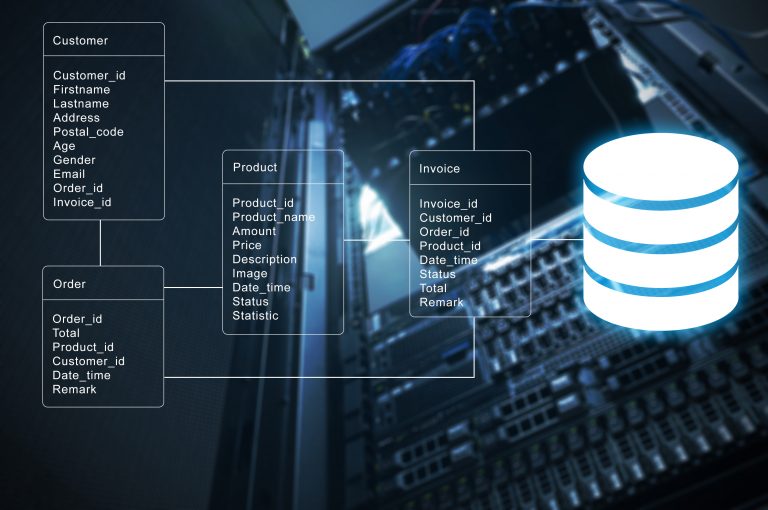

Data traceability concerns visibility into the exact source of a piece of information. Detecting the early indicators of future product failures is only useful if you can tie those indicators to the relevant processes on the factory floor. Suppose you find that variation during an upstream finishing operation is correlated with tolerance stack-up issues in transmission assembly. If the data you’ve been collecting doesn’t distinguish between two separate machines performing the same finishing operation, you’ll still have some work to do.

It should be noted, however, that if we’re talking about the source of some data in terms of database vs. data lake vs data warehouse, Acerta is agnostic. From our perspective, how the data is stored matters much less than the conditions under which it was obtained or its informational content. This brings us to the final component of data quality: completeness. The simple fact is that if you want to predict failures, you need all the relevant information regarding what came before and after failures occur.

In the context of machine learning, invoking a notion of relevance might seem to risk dredging up the frame problem, but really it’s just a question of having the right domain knowledge. If we don’t know the process map for a dataset we get from a manufacturing line, it will probably take longer to analyze. If we don’t know the procedures for reworking a transmission—for example—our assumptions about what we’re looking at could be incorrect. Of course, having data that’s both reliable and traceable can go a long way toward understanding a process map.

Symptoms of Bad Data

The most important thing to remember in discussions of data quality is that it’s not so much a matter of good vs bad, as it is bad vs worse. That’s partly tongue-in-cheek, but it can be helpful for remembering that there is no such thing as perfect quality data. It’s a bit like evolutionary biology, where an organism’s “fitness” isn’t an inherent attribute—fitness has to be defined in relation to a particular purpose.

Nevertheless, there are some signs that are almost universally indicative of poor quality data.

For example, if you calculate the missing values and find that the percentage is relatively high, that’s a symptom of bad data. If there are metadata issues—your column names are confusing or just plain bizarre—you might have bad data. Similarly, if the information content seems “crazy”, that’s another symptom of bad data.

We’ve seen all these symptoms at Acerta—voltages labelled as braking forces, GPS data suggesting vehicles are travelling three times around the Earth in less than an hour, velocities fluctuating so rapidly that the change in kinetic energy would cause the vehicle to explode. In fact, our data scientists have built tools to check the sanity of client data specifically for cases like these.

Improving Data Quality

If you’ve made it this far in the post, you might have found the preceding examples of bad data uncomfortably familiar. For those in manufacturing who are worried about the quality of their data, the good news is that there are simple steps you can take to make improvements. Volume is the most obvious, as a good dataset is generally a large one.

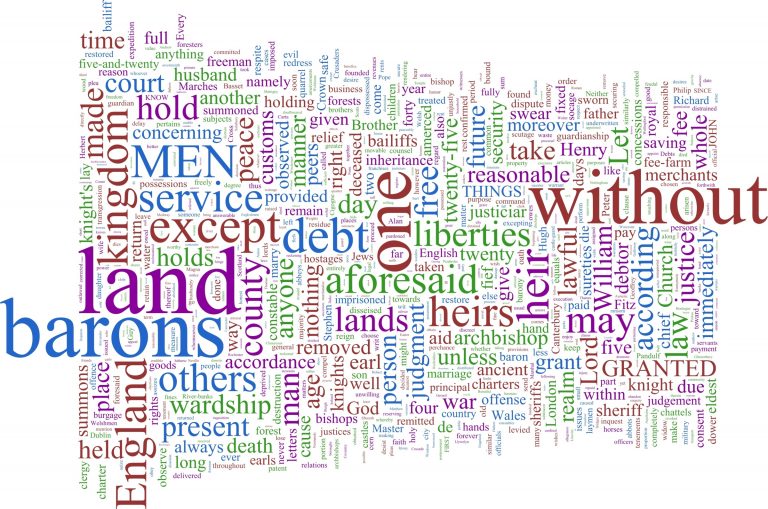

However, you should avoid falling into the trap of thinking that all you need to do to improve your data quality is to collect a lot more of it. This goes back to the distinction between data and information. The amount of information in a dataset depends on the amount of variation in the signals; if you have signals that don’t vary a lot, they’re probably not information-bearing. Think of it this way: the volume of data in the Magna Carta and a document with the word ‘buffalo’ repeated 4,478 times is the same, though they differ massively in information content.

A better approach to improving data quality is to start by asking who will be using the data you’re collecting and to what purpose. Once those stakeholders are identified, allow them to guide your data collection. Ideally, you’ll have a “data governor” (or maybe a Speaker for the Data), who can advocate on behalf of data quality.

In one of our best experiences with client data, we received a separate document that explained all the columns, the conditions during data collection, and even some observations from their engineers. In other words, better labelling is one of the best ways to improve data quality. To that end, movement toward OS and middleware standardization in the auto industry—from organizations such as GENIVI—is a welcome development.

Share on social: