Alan Tan: Ethical AI in Automotive Manufacturing

Last updated on June 19th, 2023

Alan Tan, CTO of Acerta, participated in a fireside chat at Toronto’s Elevate Tech Festival in September. He talked with the AI Stage host, Danielle Gifford, about the practical uses of industrial AI and introduced the concept of “ethical AI”. Here’s a deeper dive into ethical AI and how it applies to manufacturing and robotics.

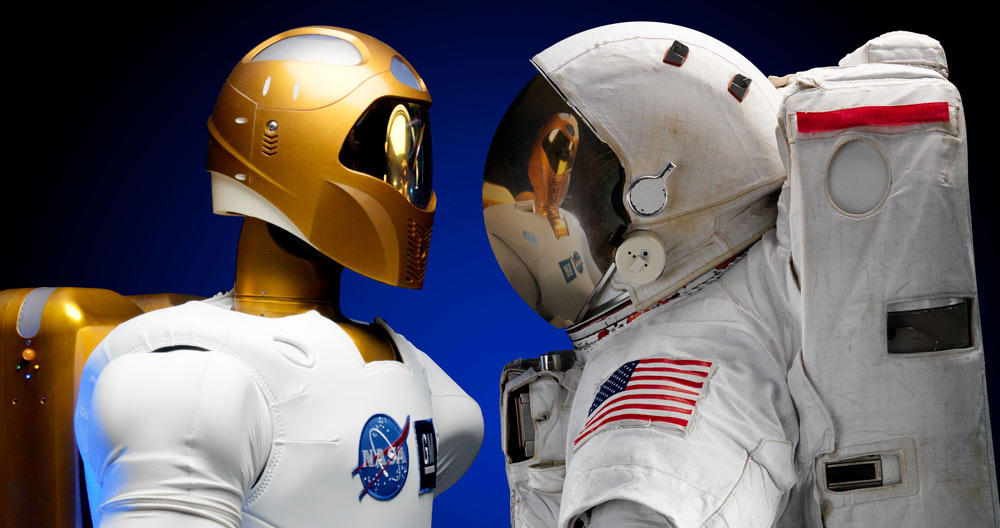

Is AI here to replace humans?

The rapid development of computer technology has given rise to many fears about the role that computers play in our lives. One of the most contentious technologies today is artificial intelligence (AI). People ask: Who has access to AI? What is it used for? Who controls it? Or, even more frightening, does it control itself?

There is concern that artificial intelligence is on a collision course to replace us at work, in art, and even in relationships. Rest assured, today’s artificial intelligence is not advanced enough to replace human beings. Despite the growing adoption of the technology, it still has limits. The human mind, combined with the human body’s ability to take in sensory information, still holds an edge over AI in many ways.

When we think of the role that artificial intelligence plays today, it is important to understand that it exists not as a standalone actor, but in an ecosystem that contains any number of physical objects, systems, and human beings. Artificial intelligence supplements human capabilities, and human beings can supplement AI by directing new inputs to be processed, and by altering algorithms.

Ethical AI assists human capabilities

It is through this lens that we can understand the implications behind the phrase “Ethical AI”. Ethical AI aims to assist humans with challenging or inefficient work, find clear solutions to problems quickly, enhance understanding, keep us safe, and even help protect the environment. The phrase implies that “ethics” is built into the algorithms themselves, but it is critical to remember that AI exists in an ecosystem, and the many actors within it influence how ethically the AI is used. Like a knife, which can be used to prepare a nourishing meal or to harm a person, AI is simply a tool that can be used for good or for bad.

Ethical AI in automotive manufacturing

The automotive manufacturing industry offers some concrete examples of ethical AI. Acerta uses AI and machine learning to help automotive manufacturers produce higher-quality vehicle parts faster and more efficiently. In this particular application, we have seen the following positive impacts on the ecosystem:

- Workers on the manufacturing line have a simpler job when they are alerted to patterns that help identify defects in parts before they occur.

- End customers (i.e., vehicle owners) are safer on the road because the components in their vehicles are made more closely to specification, reducing the risk of malfunctions and warranty issues.

- The amount of industrial waste in the factory is reduced: less scrap metal goes to waste, and less power and other resources are required to produce the same number of parts.
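The first point above, spotting a pattern before it turns into a defect, is the kind of signal that classic Statistical Process Control (SPC) run rules look for. Acerta does not describe its own method here, so the following is only a minimal sketch that uses a standard run rule (Nelson rule 3: six points in a row steadily increasing or decreasing) as a stand-in. The function name `trend_alerts` and the measurement values are hypothetical.

```python
from typing import List, Sequence


def trend_alerts(measurements: Sequence[float], run_length: int = 6) -> List[int]:
    """Return the indices at which the last `run_length` points have been
    steadily increasing or steadily decreasing (Nelson rule 3 when run_length=6)."""
    alerts: List[int] = []
    up = down = 1  # length, in points, of the current rising / falling run
    for i in range(1, len(measurements)):
        if measurements[i] > measurements[i - 1]:
            up, down = up + 1, 1
        elif measurements[i] < measurements[i - 1]:
            up, down = 1, down + 1
        else:
            up = down = 1  # a flat step breaks both runs
        if up >= run_length or down >= run_length:
            alerts.append(i)
    return alerts


# Hypothetical bore diameters (mm): all still within tolerance,
# but creeping upward point after point.
diameters = [10.00, 10.01, 10.00, 10.01, 10.02, 10.03, 10.04, 10.05, 10.06]
print(trend_alerts(diameters))  # [7, 8]
```

A trend like this can be flagged while every part is still within tolerance, which is what gives an operator time to act. A production system would likely combine several rules and tune them to the specific process.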

Not all AI is ethical…

Another way to understand ethical AI is to consider its opposite: unethical AI. It is well understood that social media and gaming platforms spend enormous amounts of time and money designing addictive patterns that keep people’s attention hooked for as long as possible, despite negative effects on mental and physical health. This is certainly an ethical grey area, if not an outright unethical practice, depending on a company’s motives and how it applies these patterns.

Artificial intelligence is often developed and tested thoroughly in academic settings, where biases are considered and researchers try to control for outside factors and changing variables. But once powerful tools are released into the world, there is room for mishandling.

A clear example of an unethical application of AI can occur in resume screening during the hiring process. When a large company receives many applications for a position, it sometimes uses AI tools that scan resumes and filter out candidates the AI determines will not be a good fit. These determinations can be drawn from specific human input, which carries its own biases. The AI could also “learn” its criteria by analyzing the resumes of previously successful candidates and picking out patterns that match the resumes of people who were hired in the past. But what if the previously hired candidates were all white, all male, or all graduates of prestigious schools? Some of the factors the AI weighs could then be a product of systemic bias rather than true merit. Here we can see how AI can act “unethically”, even though it might argue that it was just doing its job.
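One practical safeguard against this problem is to audit what a screening tool actually does to different groups, rather than trusting the criteria it was given. As a minimal sketch (not a description of any particular vendor’s tool), the following computes the selection rate for each group and compares it with the highest rate, in the spirit of the “four-fifths rule” from the US Uniform Guidelines on Employee Selection Procedures. The function names, group labels, and counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of applicants in each group that the screener advanced."""
    totals: Counter = Counter()
    advanced: Counter = Counter()
    for group, passed in decisions:
        totals[group] += 1
        if passed:
            advanced[group] += 1
    return {g: advanced[g] / totals[g] for g in totals}


def impact_ratios(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no applicant in any group was advanced")
    return {g: r / best for g, r in rates.items()}


# Hypothetical screening outcomes: 100 applicants per group.
outcomes = (
    [("group_a", i < 40) for i in range(100)]
    + [("group_b", i < 20) for i in range(100)]
)
ratios = impact_ratios(outcomes)
flagged = [g for g, r in ratios.items() if r < 0.8]  # four-fifths threshold
print(ratios, flagged)  # {'group_a': 1.0, 'group_b': 0.5} ['group_b']
```

A ratio below 0.8 is a rule-of-thumb flag for possible adverse impact, not proof of bias, and a ratio above it does not prove the tool is fair; it simply tells a human where to look more closely.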

Human-friendly AI

Considering these examples, there is a strong case for ethical AI. Beyond carefully considering why and how AI is deployed, another important part is making AI human-friendly. Just like the computer itself, AI must be designed to be understood by humans, not reserved for cloistered intellectuals.

Human beings have a strong ability to recall data, process new data, analyze it, and take appropriate action. Artificial intelligence is more limited when it encounters unexpected forms of data and must determine the correct course of action. If the analysis presented is not actionable, or lacks specifics on how to act, then the tool is not being used effectively.

Let’s think about another manufacturing example: Acerta’s LinePulse collects data from manufacturing lines and performs advanced analytics on it in real time to help line operators take action to prevent defects. The analysis presented to users may do a great job of explaining the problem, but if the user does not clearly understand what action to take, that is a problem.

Giving AI the ability to draw conclusions and recommend actions based on its analysis, however, empowers line operators to make full use of these insights. Data engineers at the manufacturing plant, on the other hand, may be looking for other trends in the data, or have a stronger need to dig deeper and understand “why” problems occur. They don’t need to be told to stop a machine; they need access to objective data that they can use to add a layer of human comprehension and recommend more strategic business decisions, e.g., do we need to purchase new equipment, or should we set up another assembly line?

LinePulse was built to present data using the familiar visualizations and conventions of Statistical Process Control (SPC) systems. Because the AI communicates in a “language” that manufacturing workers already know, its output is more likely to be understood. This is an example of human-friendly AI.
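For readers less familiar with SPC, the following minimal sketch shows the kind of calculation behind a standard individuals (X) control chart: a center line plus 3-sigma limits, with sigma estimated from the average moving range. This is a textbook SPC method offered for illustration, not LinePulse’s implementation; the function names and measurements are hypothetical.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def individuals_limits(baseline: Sequence[float]) -> Tuple[float, float, float]:
    """Return (LCL, center line, UCL) for an individuals (X) control chart.
    Sigma is estimated as MR-bar / 1.128 (d2 for n=2), so the 3-sigma
    limits sit at center +/- 2.66 * MR-bar."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline measurements")
    center = mean(baseline)
    # Average absolute difference between consecutive measurements.
    mr_bar = mean(abs(b - a) for a, b in zip(baseline, baseline[1:]))
    half_width = 2.66 * mr_bar
    return center - half_width, center, center + half_width


def out_of_control(points: Sequence[float], lcl: float, ucl: float) -> List[int]:
    """Indices of points that fall outside the control limits."""
    return [i for i, x in enumerate(points) if x < lcl or x > ucl]


# Hypothetical baseline from a stable run, then a few new parts.
baseline = [5.0, 5.2, 4.9, 5.1, 5.0, 4.8, 5.1]
lcl, center, ucl = individuals_limits(baseline)
print(round(lcl, 2), round(center, 2), round(ucl, 2))  # 4.44 5.01 5.59
print(out_of_control([5.0, 5.3, 5.7, 4.3], lcl, ucl))  # [2, 3]
```

Presenting an AI’s findings against limits like these lets an operator read an alert the same way they would read any other control chart.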

It is worth mentioning that the ecosystem of AI is rarely static. New human users may join, current users grow and change, and physical assets such as manufacturing machines are replaced or change the way they function over time. New sensor data may be added. AI must be designed to be flexible enough for a changing world. AI that is outdated, cumbersome, and disconnected from the real world becomes less ethical because it is no longer improving our lives.
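One common way to notice that the world around a model has changed, for example after a machine is serviced or a sensor is replaced, is to compare the distribution of recent input data against a baseline. The following minimal sketch computes the Population Stability Index (PSI), a drift metric widely used in model monitoring. It is a swapped-in illustration rather than anything Acerta has described, and the function names, readings, and cut points are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_shares(data: Sequence[float], cuts: Sequence[float]) -> List[float]:
    """Share of `data` in each bin defined by sorted cut points:
    (-inf, cuts[0]), [cuts[0], cuts[1]), ..., [cuts[-1], +inf)."""
    counts = [0] * (len(cuts) + 1)
    for x in data:
        counts[bisect_right(cuts, x)] += 1
    # Floor empty bins at a tiny share so the log term below stays finite.
    return [max(c / len(data), 1e-6) for c in counts]


def psi(baseline: Sequence[float], recent: Sequence[float], cuts: Sequence[float]) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_shares(baseline, cuts)
    a = bin_shares(recent, cuts)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical sensor readings before and after a machine was serviced.
before = [1, 2, 3, 4, 5, 6, 7, 8]
after = [6, 7, 8, 9, 6, 7, 8, 9]
cuts = [3, 6]
print(psi(before, before, cuts))        # 0.0
print(psi(before, after, cuts) > 0.25)  # True
```

A commonly cited rule of thumb treats PSI below 0.1 as little shift, 0.1 to 0.25 as moderate, and above 0.25 as significant. A high value is a cue for a person to review or retrain the model, not an automatic verdict that it is wrong.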

